Thanks, here is the healthcheck. BTW, I've tried PORTAINER_ENV values 0 through 3, and all of them return the same error.

Checking your OliveTin-for-Channels installation...

(extended_check=true)

OliveTin Container Version 2025.07.21

OliveTin Docker Compose Version 2025.03.26

----------------------------------------

Checking that your selected Channels DVR server (192.168.0.51:8089) is reachable by URL:

HTTP Status: 200 indicates success...

```
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
100  1276  100  1276    0     0   138k      0 --:--:-- --:--:-- --:--:--  138k
```

HTTP Status: 200

Effective URL: http://192.168.0.51:8089/
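
The reachability step reports an HTTP status and an effective URL. Judging from the curl progress meter, the script presumably uses curl for this, but that is an assumption. A minimal Python sketch of the same check follows. `check_reachable` is a hypothetical helper name. Any answer from the server, including 4xx/5xx, counts as "reachable" and returns its status code, while connection failures raise an exception:

```python
import urllib.error
import urllib.request


def check_reachable(url: str, timeout: float = 5.0) -> tuple:
    """Return (HTTP status, effective URL after redirects) for a GET request.

    Failures such as a refused connection or an unknown host raise an
    exception (usually urllib.error.URLError) instead of returning a status.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status, response.geturl()
    except urllib.error.HTTPError as err:
        # The server answered with 4xx/5xx, so it is reachable; report the code.
        return err.code, err.geturl()


# Hypothetical usage against the DVR address shown above:
# print(check_reachable("http://192.168.0.51:8089/"))
```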

----------------------------------------

Checking that your selected Channels DVR server's data files (/mnt/192.168.0.51-8089) are accessible:

Folders with the names Database, Images, Imports, Logs, Movies, Streaming and TV should be visible...

```
total 8
drwxr-xr-x 2 root root 4096 Jul 24 21:37 .
drwxr-xr-x 1 root root 4096 Jul 27 15:36 ..
drwxr-xr-x 2 root root 0 Jul 26 22:05 Database
drwxr-xr-x 2 root root 0 Jul 20 12:49 Images
drwxr-xr-x 2 root root 0 Jul 24 16:52 Imports
drwxr-xr-x 2 root root 0 Sep 5 2020 Live TV
drwxr-xr-x 2 root root 0 Jun 27 2022 Logs
drwxr-xr-x 2 root root 0 Oct 6 2023 Movies
drwxr-xr-x 2 root root 0 Jul 5 22:10 Streaming
drwxr-xr-x 2 root root 0 Jul 9 10:53 TV
```

Docker reports your current DVR_SHARE setting as...

/mnt/data/supervisor/media/DVR/Channels

If the listed folders are NOT visible, AND you have your Channels DVR and Docker on the same system:

Channels reports this path as...

Z:\Recorded TV\Channels

When using WSL with a Linux distro and Docker Desktop, it's recommended to use...

/mnt/z/Recorded TV/Channels
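
The WSL suggestions follow a simple mapping. The drive letter becomes a lower-case folder under `/mnt/`, and backslashes become forward slashes. Below is a minimal Python sketch of that mapping. It assumes WSL's default automount root of `/mnt`, which can be changed under `[automount]` in `/etc/wsl.conf`. `windows_to_wsl` is a hypothetical helper name. Inside WSL itself, `wslpath -u` performs the real conversion using the actual mount settings:

```python
import re
from pathlib import PurePosixPath, PureWindowsPath


def windows_to_wsl(path: str, mount_root: str = "/mnt") -> str:
    """Map a Windows drive path (e.g. Z:\\Recorded TV\\Channels) to its WSL path.

    Assumes WSL's default automount root of /mnt; pass mount_root if
    /etc/wsl.conf sets a different [automount] root.
    """
    win = PureWindowsPath(path)
    if not re.fullmatch(r"[A-Za-z]:", win.drive):
        raise ValueError(f"not a drive-letter path: {path!r}")
    # parts[0] is the anchor ("Z:\\"); the remaining parts are folder names.
    return str(PurePosixPath(mount_root, win.drive[0].lower(), *win.parts[1:]))


print(windows_to_wsl(r"Z:\Recorded TV\Channels"))  # /mnt/z/Recorded TV/Channels
```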

----------------------------------------

Checking that your selected Channels DVR server's log files (/mnt/192.168.0.51-8089_logs) are accessible:

Folders with the names data and latest should be visible...

```
total 4
drwxr-xr-x 2 root root 0 Jun 27 2022 .
drwxr-xr-x 1 root root 4096 Jul 27 15:36 ..
drwxr-xr-x 2 root root 0 May 12 04:24 comskip
drwxr-xr-x 2 root root 0 May 12 04:24 recording
```

Docker reports your current LOGS_SHARE setting as...

/mnt/data/supervisor/media/DVR/Channels/Logs

If the listed folders are NOT visible, AND you have your Channels DVR and Docker on the same system:

Channels reports this path as...

C:\ProgramData\ChannelsDVR

When using WSL with a Linux distro and Docker Desktop, it's recommended to use...

/mnt/c/ProgramData/ChannelsDVR
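
Both folder checks come down to comparing the names in an `ls -la` listing against an expected set. Below is a sketch of that comparison. It assumes GNU `ls -la` output, which has eight metadata columns before the name, so names with spaces such as `Live TV` stay whole. `missing_folders` is a hypothetical name. The sample is copied from the log-share listing:

```python
# Sample copied from the LOGS_SHARE listing in the healthcheck output.
LOGS_LISTING = """\
total 4
drwxr-xr-x 2 root root 0 Jun 27 2022 .
drwxr-xr-x 1 root root 4096 Jul 27 15:36 ..
drwxr-xr-x 2 root root 0 May 12 04:24 comskip
drwxr-xr-x 2 root root 0 May 12 04:24 recording
"""


def missing_folders(listing: str, expected: set[str]) -> set[str]:
    """Return the expected directory names that are absent from `ls -la` output."""
    present = set()
    for line in listing.splitlines():
        # 8 metadata columns precede the name; maxsplit keeps names like "Live TV" whole.
        fields = line.split(None, 8)
        if len(fields) == 9 and fields[0].startswith("d"):
            present.add(fields[8])
    return expected - present


print(sorted(missing_folders(LOGS_LISTING, {"data", "latest"})))  # ['data', 'latest']
```

On the log-share listing, both expected folders are reported missing. This fits the healthcheck's own hint: LOGS_SHARE is set to /mnt/data/supervisor/media/DVR/Channels/Logs, while Channels reports the log path as C:\ProgramData\ChannelsDVR.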

----------------------------------------

Checking if your Portainer token is working on ports 9000 and/or 9443:

Portainer http response on port 9000 reports version

Portainer Environment ID for local is

Portainer https response on port 9443 reports version 2.32.0

Portainer Environment ID for local is
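
The Portainer check gets a version from port 9443 but no Environment ID for `local`. Listing the environments through Portainer's REST API shows which IDs actually exist. The API lists environments at `GET /api/endpoints` and accepts access tokens in the `X-API-Key` header. This is a sketch, not the healthcheck's own code. The function names are hypothetical. It assumes port 9443 serves HTTPS with Portainer's default self-signed certificate, so certificate verification is turned off:

```python
import json
import ssl
import urllib.request


def list_environments(host: str, port: int, token: str) -> list:
    """GET /api/endpoints from Portainer, authenticating with an access token."""
    scheme = "https" if port == 9443 else "http"
    request = urllib.request.Request(
        f"{scheme}://{host}:{port}/api/endpoints",
        headers={"X-API-Key": token},
    )
    context = None
    if scheme == "https":
        # Assumes a self-signed certificate on 9443, so verification is
        # disabled; only do this on a trusted LAN.
        context = ssl.create_default_context()
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return json.load(response)


def environment_ids(environments: list) -> dict:
    """Map each environment's Name to its numeric Id."""
    return {env["Name"]: env["Id"] for env in environments}


# Example against the settings shown below (token left as a placeholder):
# print(environment_ids(list_environments("192.168.0.20", 9443, "<PORTAINER_TOKEN>")))
```

If `local` is missing from the printed mapping, the environment probably has a different name. Its `Id` would then presumably be the value to try for PORTAINER_ENV.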

----------------------------------------

Here's a list of your current OliveTin-related settings:

```
HOSTNAME=olivetin
CHANNELS_DVR=192.168.0.51:8089
CHANNELS_DVR_ALTERNATES=another-server:8089 a-third-server:8089
CHANNELS_CLIENTS=appletv4k firestick-master amazon-aftkrt
ALERT_SMTP_SERVER=smtp.gmail.com:587
ALERT_EMAIL_FROM=[Redacted]@gmail.com
ALERT_EMAIL_PASS=[Redacted]
ALERT_EMAIL_TO=[Redacted]@gmail.com
UPDATE_YAMLS=true
UPDATE_SCRIPTS=true
PORTAINER_TOKEN=[Redacted]
```

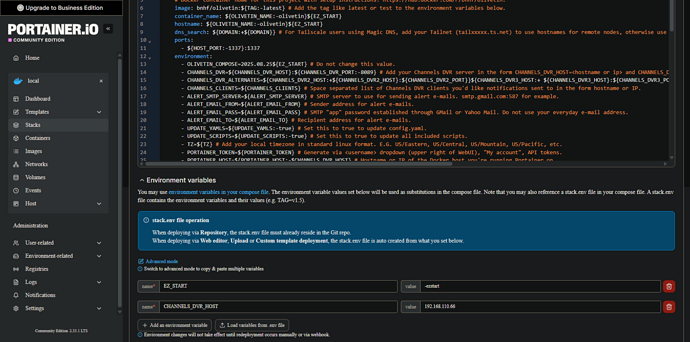

```
PORTAINER_HOST=192.168.0.20
PORTAINER_PORT=9443
PORTAINER_ENV=1
```

----------------------------------------

Here's the contents of /etc/resolv.conf from inside the container:

```
# Generated by Docker Engine.
# This file can be edited; Docker Engine will not make further changes once it
# has been modified.

nameserver 127.0.0.11
search lan
options ndots:0

# Based on host file: '/etc/resolv.conf' (internal resolver)
# ExtServers: [host(192.168.0.1) host(2603:7000:b500:15f8::1)]
# Overrides: []
# Option ndots from: internal
```

----------------------------------------

Here's the contents of /etc/hosts from inside the container:

```
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
fe00:: ip6-localnet
ff00:: ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
172.19.0.2 olivetin
```

----------------------------------------

Your WSL Docker-host is running:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer
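
All of the host-side sections fail with the same message. This suggests the container reads these details through a named pipe (FIFO) that `fifopipe_hostside.sh` provides in the directory bound to `/config`. The sketch below shows the kind of existence test that message implies, assuming only POSIX semantics. `fifo_ready` is a hypothetical name. The pipe's actual filename isn't shown in the output, so none is assumed here:

```python
import os
import stat


def fifo_ready(path: str) -> bool:
    """True only if `path` exists and is a named pipe (FIFO).

    os.stat() does not open the pipe, so this never blocks waiting for a writer.
    """
    try:
        mode = os.stat(path).st_mode
    except FileNotFoundError:
        return False
    return stat.S_ISFIFO(mode)
```

A regular file with the expected name would still fail this check. Only a pipe created on the host, for example by the helper script, passes.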

----------------------------------------

Your WSL Docker-host's /etc/resolv.conf file contains:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------

Your WSL Docker-host's /etc/hosts file contains:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------

Your WSL Docker-host's /etc/wsl.conf file contains:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------

Your Windows PC's %USERPROFILE%\.wslconfig file contains:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------

Your Windows PC's etc/hosts file contains:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------

Your Windows PC's DNS server resolution:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------

Your Windows PC's network interfaces:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------

Your Tailscale version is:

FIFO pipe not found. Is the host helper script running?

Run sudo -E ./fifopipe_hostside.sh "$PATH" from the directory you have bound to /config on your host computer

----------------------------------------