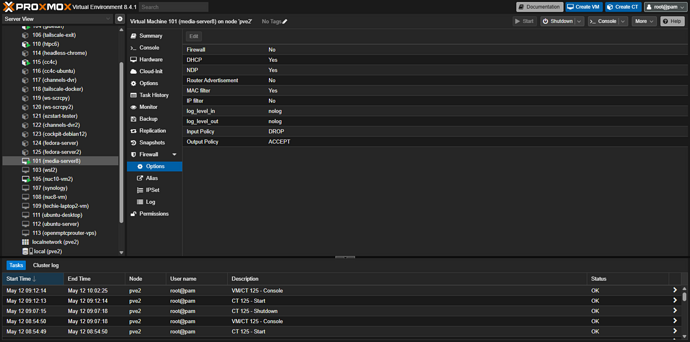

If you pass through an iGPU to a VM, it's dedicated to that VM, so you'd need multiple GPUs in order to keep one available for the Proxmox console. If you do the same with a CT, it can be shared with other CTs and Proxmox itself.
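For the CT case, sharing the iGPU usually comes down to a few lines in the container's config file on the Proxmox host. Here's a rough sketch, assuming an Intel iGPU exposed as `/dev/dri/card0` and `/dev/dri/renderD128`, and a hypothetical container ID of 101. Check `ls -l /dev/dri` on your host first, since the device minor numbers can differ, and an unprivileged CT will likely need extra group/permission mapping on top of this.

```
# /etc/pve/lxc/101.conf  (101 is a placeholder container ID)
# Allow the DRM character devices (major 226): card0 and renderD128
lxc.cgroup2.devices.allow: c 226:0 rwm
lxc.cgroup2.devices.allow: c 226:128 rwm
# Bind-mount the host's /dev/dri into the container
lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dir
```

Because this is a bind mount rather than a PCI passthrough, the host and any other CTs with the same lines keep access to the GPU.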

One approach you might consider is to have your Windows 11 VM act as a Samba server using DrivePool, and install Channels DVR directly in a CT (with Chrome, not Chromium, for best TVE compatibility). That way you can get the transcoding you're after, while still sharing the GPU with other CTs and the hypervisor.

Installing Channels in a Debian or Ubuntu CT is basically the same as installing it using either of those on bare metal.

So I ended up adding another user on the VM with a local account. I had to use a different username, but it works now: both RDP and the shares can be connected to.
