There seems to be a group of us now using (or planning to use) Proxmox as a platform for everything Channels DVR related and beyond. As such, I thought it might be nice to have a thread dedicated to those of us on the path to "virtualizing everything" (or almost everything anyway). In addition, I'm probably not the only one using Proxmox 7 for my Channels stuff and making the move to Proxmox 8 before support for 7 ends on 2024.07.31.

In my case, I have the luxury of two identical 2U systems, so one can be production and the other lab. And in this case, I'm building out the lab system to become the production system, running Proxmox 8 and implementing a few changes from when I built my first Proxmox server around this time last year.

I'll add to this thread as I go, and do my best to document things to know, particularly as they relate to using Proxmox in a Channels DVR environment including the many great Docker-based extensions.

Installing Proxmox:

After installing Proxmox, I've had good success using the Proxmox VE Post Install script found here:

https://helper-scripts.com/scripts

This will set your repositories correctly for home (free) use, remove the subscription nag, and bring Proxmox up-to-date -- along with a few other things.

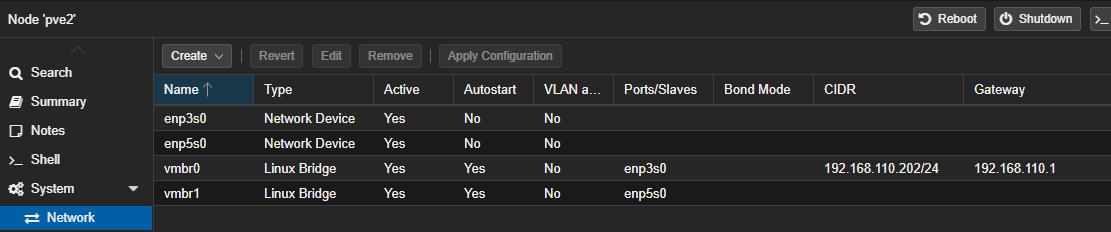

If you have a second Network Interface Card, which is a good idea in a Proxmox installation (either 2.5 or 10Gbps if possible), now's the time to add a Linux Bridge on the physical NIC so it'll be available in your virtual environments. In my case, a 2.5Gbps Ethernet port is my "administrative port", and was set up during the installation. The 10Gbps PCIe card I installed will be the one I use for my virtualizations. Here I've added a bridge called vmbr1 that's connected to my physical enp6s0 port:

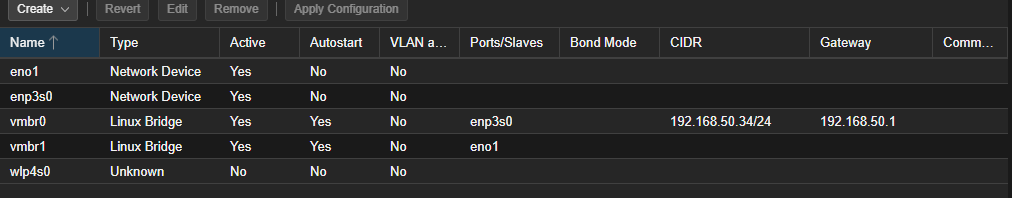

The setup for these bridges is very simple, as you typically don't specify anything except the bridge and port names:
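For anyone who prefers to see it in text form: as far as I know, the WebUI writes a bridge like this to /etc/network/interfaces on the host. Here's a minimal sketch that only prints the stanza for the vmbr1/enp6s0 pair from this post. bridge_stanza is a made-up helper name, and the bridge-stp and bridge-fd lines are the defaults I'd expect Proxmox to add, so compare against your own file rather than pasting blindly:

```shell
#!/bin/sh
# Print an /etc/network/interfaces stanza for a Linux bridge that has no IP
# address of its own (the guests attached to it get their own addresses).
# Arguments: $1 = bridge name, $2 = physical port.
bridge_stanza() {
    printf 'auto %s\n' "$1"
    printf 'iface %s inet manual\n' "$1"
    printf '\tbridge-ports %s\n' "$2"
    printf '\tbridge-stp off\n'
    printf '\tbridge-fd 0\n'
}

# Names taken from this post; substitute your own bridge and port.
bridge_stanza vmbr1 enp6s0
```

It only prints, so nothing on the host changes. If you do edit the file by hand, ifreload -a (from ifupdown2, which current Proxmox releases ship) should apply it without a reboot.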

If you're a Tailscale user, this is a good time to install it -- and also to emphasize that we'll be installing very few additional packages to Proxmox itself. Generally with a hypervisor like this, one wants to keep it as "stock" as possible, with any needed applications installed in LXC containers or Virtual Machines.

The Tailscale convenience script works nicely here, using this command:

curl -fsSL https://tailscale.com/install.sh | sh

Execute a tailscale up followed by the usual authorization process.

Installing a Proxmox LXC for Docker containers:

To install an LXC container, the first thing you need to do is download a template for it. This is done by clicking on "local" in the Proxmox WebUI, followed by "CT Templates". I like using Debian, as Proxmox and so many other things are based on it:

Go ahead and create your Debian CT, and use your best judgement on what resources to allocate based on what's available CPU, RAM and Disk-wise on your chosen hardware. Be aware it's OK to over-allocate on vCPUs, but not on RAM or Disk. You can go back and tweak settings later if needed.
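Since RAM is the one you can't over-commit, a quick tally helps before creating more guests. Here's a rough sketch with made-up numbers and a hypothetical ram_fits helper: it adds up the RAM you plan to give each guest (in MB) and compares it to the total you pass in.

```shell
#!/bin/sh
# ram_fits TOTAL_MB ALLOC_MB...
# Sum the planned per-guest RAM allocations and compare against TOTAL_MB.
# Returns 0 when everything fits, 1 when RAM would be over-allocated.
ram_fits() {
    total_mb=$1
    shift
    sum_mb=0
    for mb in "$@"; do
        sum_mb=$((sum_mb + mb))
    done
    echo "planned ${sum_mb} MB of ${total_mb} MB"
    [ "$sum_mb" -le "$total_mb" ]
}

# Example figures only (a 32 GB host with three guests), not my actual allocations.
ram_fits 32768 8192 16384 4096
```

On the host, awk '/^MemTotal:/ {print int($2/1024)}' /proc/meminfo gives the real total in MB. Leave some headroom for Proxmox itself.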

Here's what I'm doing for my first LXC, which I'm calling "channels", as far as Resources and Options go:

I'll circle back later and set up the start and shutdown order once I have a few more virtualizations completed.

No need to start your LXC yet, as we're going to make a couple of changes to the "conf" file for this LXC to support Tailscale and to pass our Intel processor's integrated GPU through to have it available for transcoding.

Note the number assigned to your newly created LXC (100 in my case) and go to the shell prompt for your pve:

Change directories to /etc/pve/lxc, followed by nano 100.conf:

root@pve2:~# cd /etc/pve/lxc

root@pve2:/etc/pve/lxc# nano 100.conf

The last 9 lines of the file are the ones we're adding to support Tailscale and Intel GPU passthrough:

For Tailscale (OpenVPN or WireGuard too):

lxc.cgroup2.devices.allow: c 10:200 rwm

lxc.mount.entry: /dev/net/tun dev/net/tun none bind,create=file

For Intel Quick Sync:

lxc.cgroup2.devices.allow: c 226:0 rwm

lxc.cgroup2.devices.allow: c 226:128 rwm

lxc.mount.entry: /dev/dri/renderD128 dev/dri/renderD128 none bind,optional,create=file 0, 0

lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dir

lxc.hook.pre-start: sh -c "chown -R 100000:100000 /dev/dri"

lxc.hook.pre-start: sh -c "chown 100000:100044 /dev/dri/card0"

lxc.hook.pre-start: sh -c "chown 100000:100106 /dev/dri/renderD128"
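In case the numbers in those chown hooks look arbitrary: assuming an unprivileged LXC with the default Proxmox ID mapping, container ID N shows up on the host as 100000 + N. So 100044 is container GID 44 (video on Debian) and 100106 is container GID 106, which is render in this container going by the listing further down. Yours may differ, so check with getent group video render inside the LXC. This sketch (hook_lines is a hypothetical helper) prints the three hook lines for whatever GIDs you pass in:

```shell
#!/bin/sh
# Assumption: default unprivileged mapping, container ID N -> host ID 100000 + N.
ID_OFFSET=100000

# hook_lines VIDEO_GID RENDER_GID
# Print the lxc.hook.pre-start lines for the given container-side GIDs.
hook_lines() {
    video_host=$((ID_OFFSET + $1))
    render_host=$((ID_OFFSET + $2))
    printf 'lxc.hook.pre-start: sh -c "chown -R %s:%s /dev/dri"\n' "$ID_OFFSET" "$ID_OFFSET"
    printf 'lxc.hook.pre-start: sh -c "chown %s:%s /dev/dri/card0"\n' "$ID_OFFSET" "$video_host"
    printf 'lxc.hook.pre-start: sh -c "chown %s:%s /dev/dri/renderD128"\n' "$ID_OFFSET" "$render_host"
}

# GIDs from this post: video=44, render=106.
hook_lines 44 106
```

With 44 and 106 it reproduces the three hook lines above, so if your render GID turns out to be something else, only the last number changes.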

Go ahead and start your LXC now, and you can quickly verify the presence of /dev/net/tun and /dev/dri:

root@channels:~# ls -la /dev/net /dev/dri

/dev/dri:

total 0

drwxr-xr-x 3 root root 100 May 25 13:01 .

drwxr-xr-x 8 root root 520 May 25 23:00 ..

drwxr-xr-x 2 root root 80 May 25 13:01 by-path

crw-rw---- 1 root video 226, 0 May 25 13:01 card0

crw-rw---- 1 root render 226, 128 May 25 13:01 renderD128

/dev/net:

total 0

drwxr-xr-x 2 root root 60 May 25 23:00 .

drwxr-xr-x 8 root root 520 May 25 23:00 ..

crw-rw-rw- 1 nobody nogroup 10, 200 May 25 13:01 tun
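If you'd rather script that check than eyeball the listing, here's a small sketch. check_dev is a hypothetical helper that relies on the shell's -c test, which is true only when the path exists and is a character device:

```shell
#!/bin/sh
# check_dev PATH
# Report whether PATH exists as a character device; returns 1 if it does not.
check_dev() {
    if [ -c "$1" ]; then
        echo "ok: $1"
    else
        echo "missing: $1"
        return 1
    fi
}

# Run inside the LXC. These are the device paths passed through in this post.
for dev in /dev/net/tun /dev/dri/card0 /dev/dri/renderD128; do
    check_dev "$dev" || true
done
```

Any "missing" line means the matching entries in the conf file didn't take, so that's the place to look first.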

Go ahead and update your LXC's distro, and install curl:

apt update

apt upgrade

apt install curl

Now, install Tailscale in the LXC using the same convenience script we used for Proxmox. Do a tailscale up and authorize in the usual way.

Next, let's install Docker, also using a convenience script:

It's just two commands:

curl -fsSL https://get.docker.com -o get-docker.sh

sh get-docker.sh

And, to confirm installation:

root@channels:~# docker version

Client: Docker Engine - Community

Version: 26.1.3

API version: 1.45

Go version: go1.21.10

Git commit: b72abbb

Built: Thu May 16 08:33:42 2024

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 26.1.3

API version: 1.45 (minimum version 1.24)

Go version: go1.21.10

Git commit: 8e96db1

Built: Thu May 16 08:33:42 2024

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.32

GitCommit: 8b3b7ca2e5ce38e8f31a34f35b2b68ceb8470d89

runc:

Version: 1.1.12

GitCommit: v1.1.12-0-g51d5e94

docker-init:

Version: 0.19.0

GitCommit: de40ad0

Next, we'll install Portainer. I'm going to use the :sts (Short Term Support) tag for this, as there's currently a slight issue between Docker and Portainer that affects getting into a container's console from the Portainer WebUI. Normally you'd want to use the :latest tag, and I'll switch to that once this issue is ironed out:

docker run -d -p 8000:8000 -p 9000:9000 -p 9443:9443 --name portainer \
--restart=always \
-v /var/run/docker.sock:/var/run/docker.sock \
-v portainer_data:/data \
cr.portainer.io/portainer/portainer-ce:sts

You can now access Portainer at http://channels:9000 (assuming your LXC is named "channels" and you're using Tailscale with MagicDNS). If you need the IP, you can do a hostname -I from the LXC console to get it.

Portainer puts a time limit on its initial configuration, so go to its WebUI promptly and at least set up your admin password. If you miss the window, you'll need to restart the container (docker restart portainer) to reset the clock.

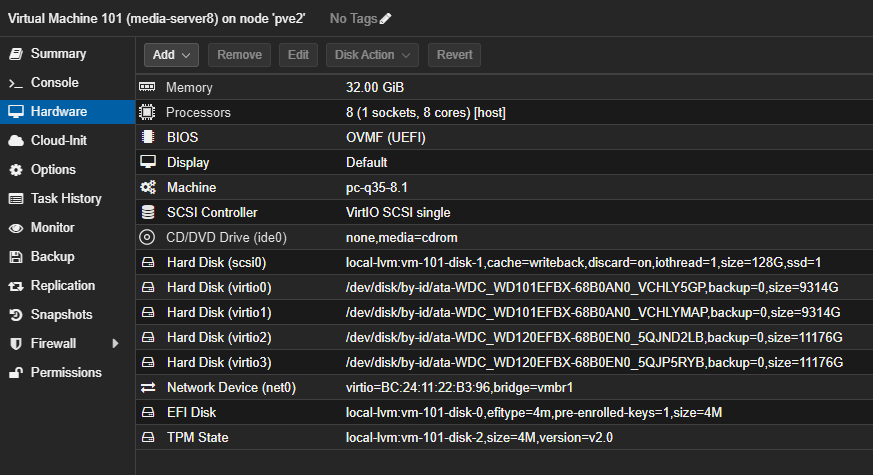

OK, so that's it for baseline configuration. I'll do some additional posts in coming days on setting up a Windows VM (in my case for DrivePool), along with adding Channels DVR and OliveTin as Docker containers.

So I ended up adding another user on the VM with a local account. I had to use a different user name, but it works now: RDP and shares can both be connected to.
